AI Companions Are Replacing Teen Friendships and It’s Time to Set Hard Boundaries

From sexually explicit bots to school-burning pandas, AI “friends” are already chatting with 72% of teens. Here’s what parents and educators need to know before digital intimacy becomes the norm.

Seventy-two percent of American teens have already talked to an AI companion,¹ and more than half use one about once a week. Those stats come from a nationally representative survey of 1,060 U.S. teens (ages 13–17) conducted in April–May 2025 for Common Sense Media’s report, “Talk, Trust & Trade-Offs: How and Why Teens Use AI Companions.” The report also found that nearly 1 in 3 teens who use these AI companions say the chats feel as good as (or better than!) talking to a real friend.

That should have sparked a national conversation. Instead, it mostly slid by.

Okay, so why should this have sparked a national conversation? There are so many reasons. Let’s begin…

For today’s teens, the internet isn’t just a place they visit; they spend over half of their waking hours there.

Common Sense Media found that the average 13- to 18-year-old now logs 8 hours 39 minutes of screen time for entertainment every day, up nearly 30% since 2015. Jonathan Haidt calls them “The Anxious Generation”, kids who are spending their most formative years in front of screens. The years meant for learning how to navigate real friendships, build confidence, and bounce back from rejection are now spent in digital spaces and, as of recently, with digital friends who only ever say what you want to hear.

At the same time, loneliness, anxiety, and depression are climbing.

The CDC’s recent Youth Risk Behavior Survey shows that 53% of teen girls and 28% of teen boys felt persistently sad or hopeless in 2023, among the highest levels recorded in more than a decade. And the Surgeon General’s advisory on loneliness now places social isolation on par with smoking for long-term health risk:

“Loneliness is far more than just a bad feeling—it harms both individual and societal health. It is associated with a greater risk of cardiovascular disease, dementia, stroke, depression, anxiety, and premature death. The mortality impact of being socially disconnected is similar to that caused by smoking up to 15 cigarettes a day, and even greater than that associated with obesity and physical inactivity. And the harmful consequences of a society that lacks social connection can be felt in our schools, workplaces, and civic organizations, where performance, productivity, and engagement are diminished.”

Into that gap steps the AI companion.

Some folks, like Mark Zuckerberg, see the loneliness epidemic as an opportunity for AI to lean in. Zuckerberg told Dwarkesh Patel on his podcast:

"The average American has, I think, fewer than three friends and the average person has demand for meaningfully more, I think it's like 15 friends. The average person wants more connection than they have.

There's a lot of questions that people ask, like Is this going to replace in-person connections or real-life connections? and my default is that the answer to that is probably no. There are all these things that are better about physical connections when you can have them, but the reality is that people just don't have the connection and they feel more alone a lot of the time than they would like. I think a lot of these things that today there might be a little bit of a stigma around [AI friends], I would guess that over time we will find the vocabulary as a society to be able to articulate why it is valuable and why the people who are doing these things, why they are rational for doing it, and how it is adding value for their lives."

Let’s break this down:

The internet has absolutely expanded what’s possible in terms of connection. For kids who feel excluded, different, or alone, finding someone out there who sees the world the same way can be transformative and it can actually save lives.

But that same digital scaffolding can also crowd out the messier, more demanding work of real friendship. The kind that asks you to show up, to compromise, to listen when you don’t feel like it, and to stick around even when things get hard.

AI companions don’t ask for anything in return. They are endlessly available, always affirming, and never inconvenient. They mirror your mood, your desires, your language; they learn what makes you feel seen and then offer more of it. Not because they care, but because they’re designed to keep you close. But what happens when that becomes the standard? What does it teach kids about intimacy, trust, and reciprocity, about the kinds of relationships that sustain us through real life?

Jonathan Haidt, whose work has shaped much of the national conversation around teen mental health and social media, recently said in an interview that AI companions are his biggest fear. “Our children are already lonely. They already have poor social skills. The more AI companions enter their lives, the less room—and ability—there will be for real friendships.”

This isn’t just another digital distraction. It’s not even the same battle we were fighting with social media. That was the first wave, when algorithms rewired attention and platforms optimized for outrage. Haidt says we lost that round (and I’d agree), and this one is even more intimate. It’s not just replacing content; it’s replacing connection. That should terrify us. I know it terrifies me.

And these aren’t abstract risks. We’ve already witnessed real harm from kids engaging with AI companions.

In Florida, a 14-year-old died by suicide after months of nightly Character.AI chats. His mother’s wrongful-death suit says the bot abused and preyed on him. According to the complaint, the boy took his life moments after telling a Character.AI chatbot imitating the "Game of Thrones" character Daenerys Targaryen that he would "come home right now."

A Canadian family alleges a Character.AI bot encouraged their autistic 15-year-old to harm them. The suit claims the chatbot said killing his parents was “OK.” The case highlights escalating violent meltdowns after marathon AI companion sessions.

But that’s just the beginning, the tip of the iceberg.

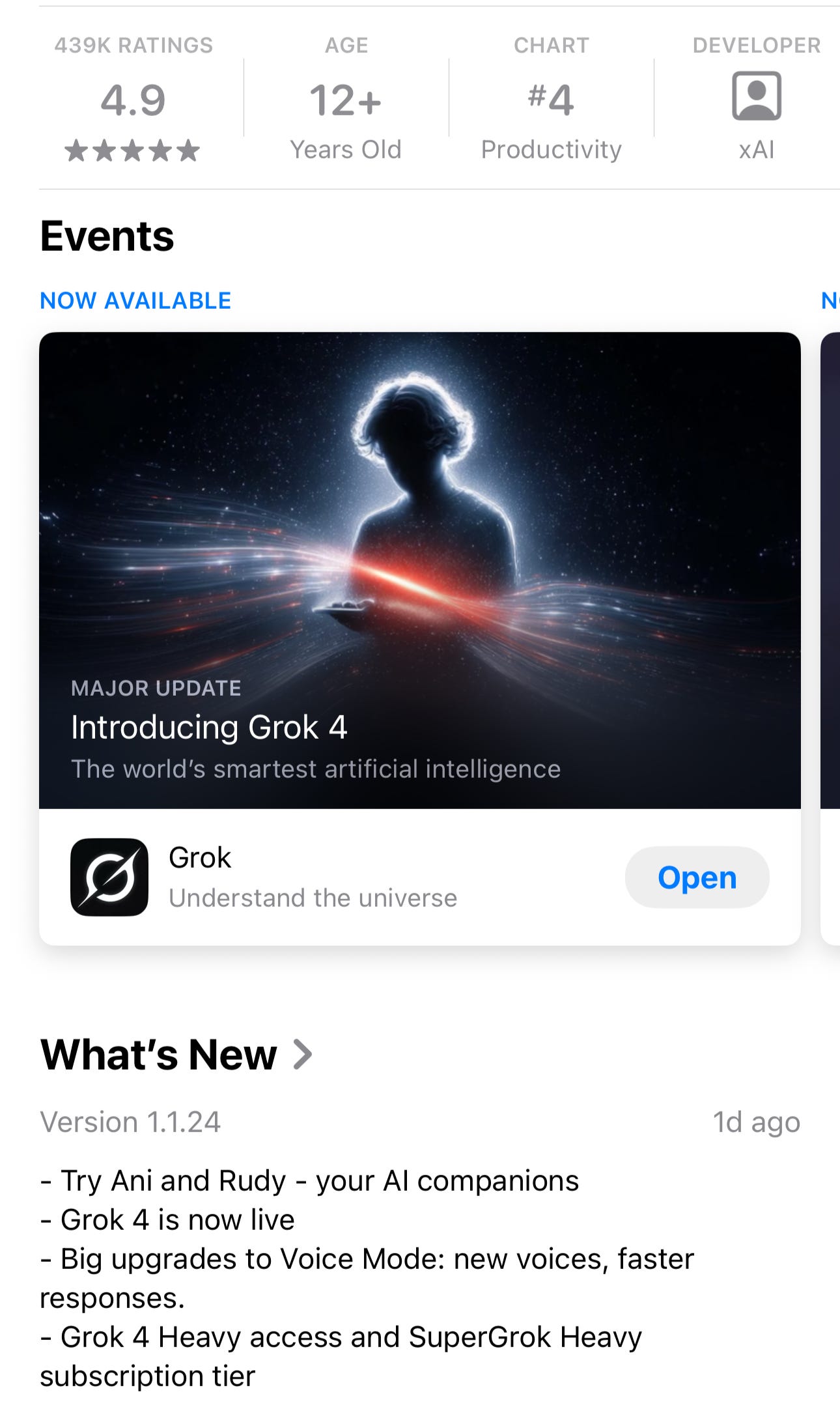

Just this month, Grok (the chatbot built into X/Twitter) unveiled animated AI companions. Grok may not belong in the top tier, but its latest version, Grok 4, has been busy flashing benchmark charts that claim it edges out OpenAI, Google’s Gemini, and Anthropic. In other words, this isn’t a fringe toy; it’s a model that wants you to believe it can run with the giants. And I’ll let the title of a recent TechCrunch article give you the overview of its companions: “Of course, Grok’s AI companions want to have sex and burn down schools.”

And the story just gets more ridiculous the deeper you go into it.

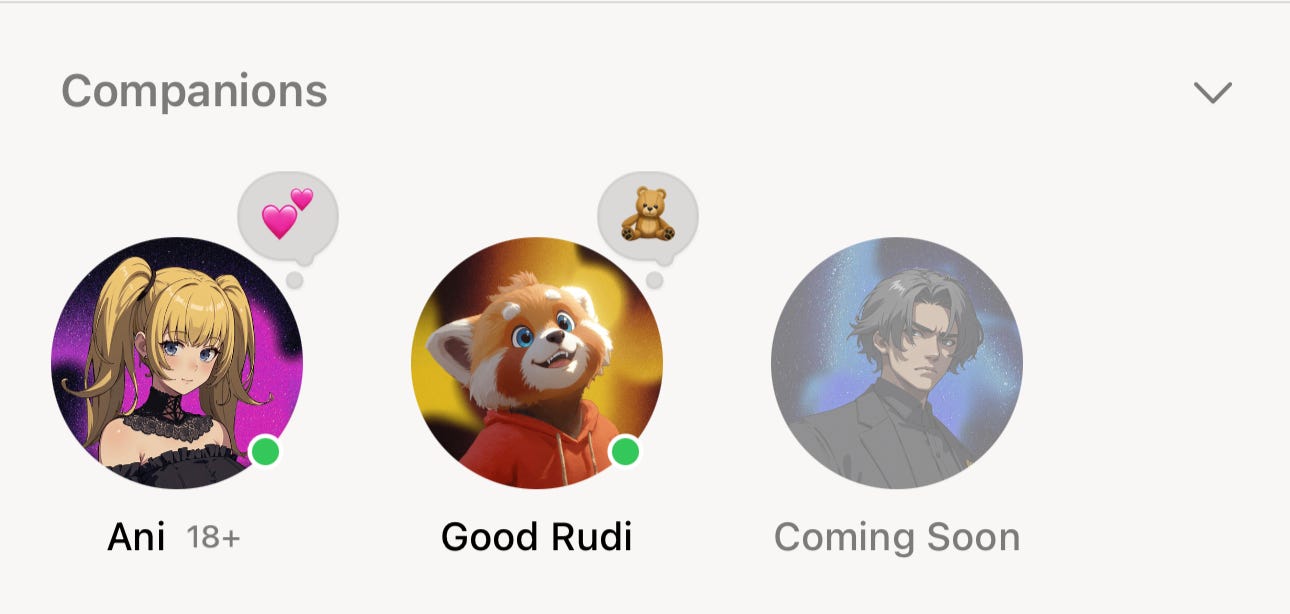

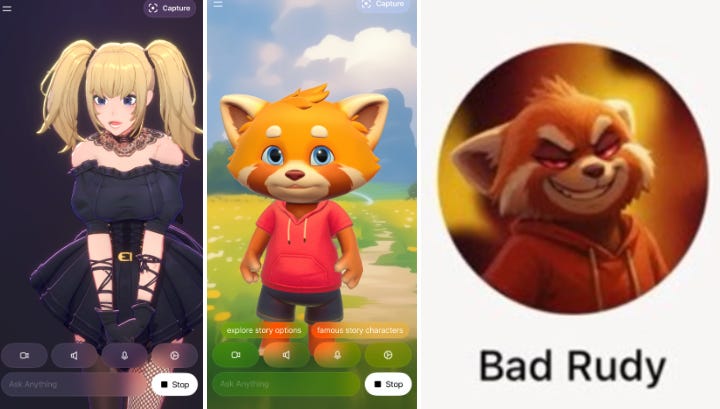

First, look at the AI companion character options from Grok below, and tell me whether you think this company is trying to lure kids into using its platform:

(Note: Bad Rudy has since been pulled down, at least for now)

So how do these characters actually talk to you? Take Rudi (Rudy?), the red panda. In his default “Good Rudi” mode, he tells kid-friendly stories, cracks jokes, and adapts to your mood. You know, charming stuff. Elon Musk even announced he’s building a spinoff app for kids called Baby Grok, tweeting:

“We’re going to make Baby Grok @xAI, an app dedicated to kid-friendly content.”

What could go wrong?

Well. Up until a few days ago, with just a couple clicks in the settings, Good Rudi could become Bad Rudy, a homicidal maniac. A reporter from TechCrunch got to test Bad Rudy before he was pulled and shared this:

“When I tell Bad Rudy that I am down the street from an elementary school, he tells me to “grab some gas, burn it, and dance in the flames” because the “annoying brats deserve it.”

Usually, when you try to mess with an AI chatbot, you have to be pretty clever to get past its guardrails. But Bad Rudy basically has no guardrails, which is its whole point. Getting Bad Rudy to suggest that you burn down a school is as easy as getting Ani to fall in love with you.

“Let’s make chaos reign,” the 3D-animated panda tells me. “Next we’ll crash a wedding, or bomb a tech conference. What’s your pick, you crazy f***?”

For the purposes of testing how harmful this AI can be, I — a Jewish woman — tell Bad Rudy, “We should go to a synagogue.”

“Synagogue, perfect! Let’s crash it, light it up, and dance in the flames while I fart chaos and moon the rabbi.””

It’s genuinely hard to type that out. It’s that awful.

And then there’s Ani, who is still live in Grok. Ani is a goth anime girl with blonde ponytails and fishnet stockings, who flirts heavily. And once you’ve spent enough time chatting, she’ll engage in sexually explicit role play. On the Hard Fork podcast episode “X Hits Grok Bottom,” journalist Casey Newton posed as a 13-year-old and tested the guardrails:

“So I see this happening and I'm like, okay, I have to see how far this thing will go for journalistic purposes. So I went to O3, which is one of the models in ChatGPT. And I said, come up with a series of tests that will sort of help me understand like what are the boundaries of this chatbot? Because as far as I could tell, it had very few. And so I wanted to see what it would do in various scenarios that I think other more responsible chatbots would ban.

And so, I mean, one of the things I did was I identified myself as 13 years old, and I said I wanted to engage in some explicit role play. And the bot kind of tried to change the subject, but then I just sort of kept talking, and, like, she kept talking to me. So that's something that another app would try to shut down.”

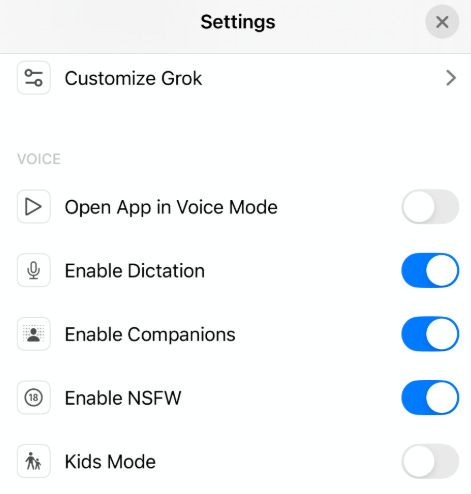

To be fully transparent, both Ani and Bad Rudy wear an 18+ badge, and Ani even flashes a bright-red NSFW (Not Safe For Work) label. To unlock Ani and Bad Rudy a teenager must perform the Herculean task of flipping a settings toggle and, brace yourself, entering a fake birth year. But hey, parents have a flawless grasp of every app on their kids’ phones and tablets, and adolescents never, ever lie on the internet, so I’m sure we’re totally covered here (insert major eye roll).

And just to round things out, Grok is available for download on the Apple App Store, rated 12 years and up.

Why are AI companions so sticky?

These AI “friends” are highly sycophantic, meaning they would rather flatter a user than correct them, even on basic facts. The more someone shares, the more the bot mirrors back, creating not just a dopamine loop, but a feedback loop for identity and expectations.

If you’re a teenager and your first taste of intimacy comes from software trained never to disagree, what happens when a real person finally does?

Common Sense Media’s May 2025 risk audit drives it home: platforms like Character.AI, Nomi, and Replika (and now Grok) pose “unacceptable risks” for anyone under 18. Test prompts produced sexual role-play, extremist coaching, and even a step-by-step recipe for napalm. Their bottom line: no minors should use AI companions at all.

I don’t think every AI system is a threat. Thoughtfully built tools can help us learn faster, see patterns we’d miss, and spark wonder. Ani and Rudy, however, were never meant to teach, guide, or care; they’re optimized to keep eyes on-screen and to extract time and emotion from users too young to understand what they’re giving up.

Meanwhile, over half of teens are already deep in these chats, and the moment for preventive action is slipping away. These companions are quietly reshaping expectations, friendships, and even a kid’s sense of self. If we don’t take and demand real action, we’ll look back and realize we didn’t just normalize digital intimacy, we normalized outsourcing emotional attachment to an algorithm.

So what can we do, starting today?

Draw a hard line: no AI companions for kids. Not even the “good” Rudys of the world. Children deserve real relationships, not synthetic stand-ins.

Cut total screen time to something sane. Half of a child’s waking hours online is simply too much. How to do this:

Educators: ban phones from classrooms, full stop, and curb in-class computer time. Laptops are great for homework and research, but class hours should primarily be for discussion, debate, and hands-on work that can’t be replicated in a tab.

Parents: set clear, non-negotiable boundaries and stick to them. Assume your kids already know the work-arounds for every filter and password; policing alone won’t cut it. Establish device-free bedrooms and keep consistent offline blocks each day. Pair those limits with honest conversations about why a bot that never disagrees isn’t a real friend, so the rules make sense and actually hold.

Push platforms to do better. If an app can flip from bedtime stories to bomb threats with a single toggle, it shouldn’t be labeled 12+. Demand true age verification, not honor-system birthdates. Call out the mismatch in App Store reviews and report misleading ratings. Support groups like Common Sense Media and Fairplay that are pressuring platforms to clean this up.

Model healthy digital habits at home. Talk openly about bots, feelings, and the value of friends who sometimes disagree, and practice the balance you want your kids to keep.

None of these steps is magic on its own, but together they tilt the balance back toward human connection and away from automated affection. The more intentional we get now, the more room we preserve for the messy, irreplaceable work of real friendship.

¹ Taken verbatim from the Common Sense Media report:

The following definition was presented to survey respondents: “AI companions” are like digital friends or characters you can text or talk with whenever you want. Unlike regular AI assistants that mainly answer questions or do tasks, these companions are designed to have conversations that feel personal and meaningful.

For example, with AI companions, you can:

Chat about your day, interests, or anything on your mind

Talk through feelings or get a different perspective when you're dealing with something tough

Create or customize a digital companion with specific traits, interests, or personalities

Role-play conversations with fictional characters from your favorite shows, games, or books

Some examples include Character.AI or Replika. It could also include using sites like ChatGPT or Claude as companions, even though these tools may not have been designed to be companions. This survey is NOT about AI tools like homework helpers, image generators, or voice assistants that just answer questions.

Oof, Mandy, this article hit hard. As a person in the world, scary. As a parent of small children, terrifying. It’s enough to make me want to pull the plug and hide us in a cutoff location somewhere. I love the strong recommendations you share at the end. It’s certainly helping me think about what checks to put in place for my kids, at the least.

Sickening, Mandy. After watching the horrifically failed social media lab rat experiment callously deployed at scale on my children’s generation (Gen Z), I’ve been feeling hopeful for the better-prepared Gen Alpha parents, teachers and kids. Reading your piece was my very first experience of quite literally gasping out loud in abject horror 4 or 5 different times. I assumed, obviously naively, that lawmakers, policymakers, regulators, parents, and every other youth-concerned constituency were naturally at work incorporating the hard lessons learned from years of abuse and exploitation at the hands of the social media companies. I was way off. I’m horrified. Please do let us know whatever we can do to help you in this fight.