The New Public-Health Crisis Hiding in Plain Sight

Please read and sign our Open Letter to Protect Kids from AI Companions, and share it widely across your networks to help build public pressure before it’s too late.

OpenAI just called something “rare” that may be one of the largest unrecognized mental-health issues of our time.

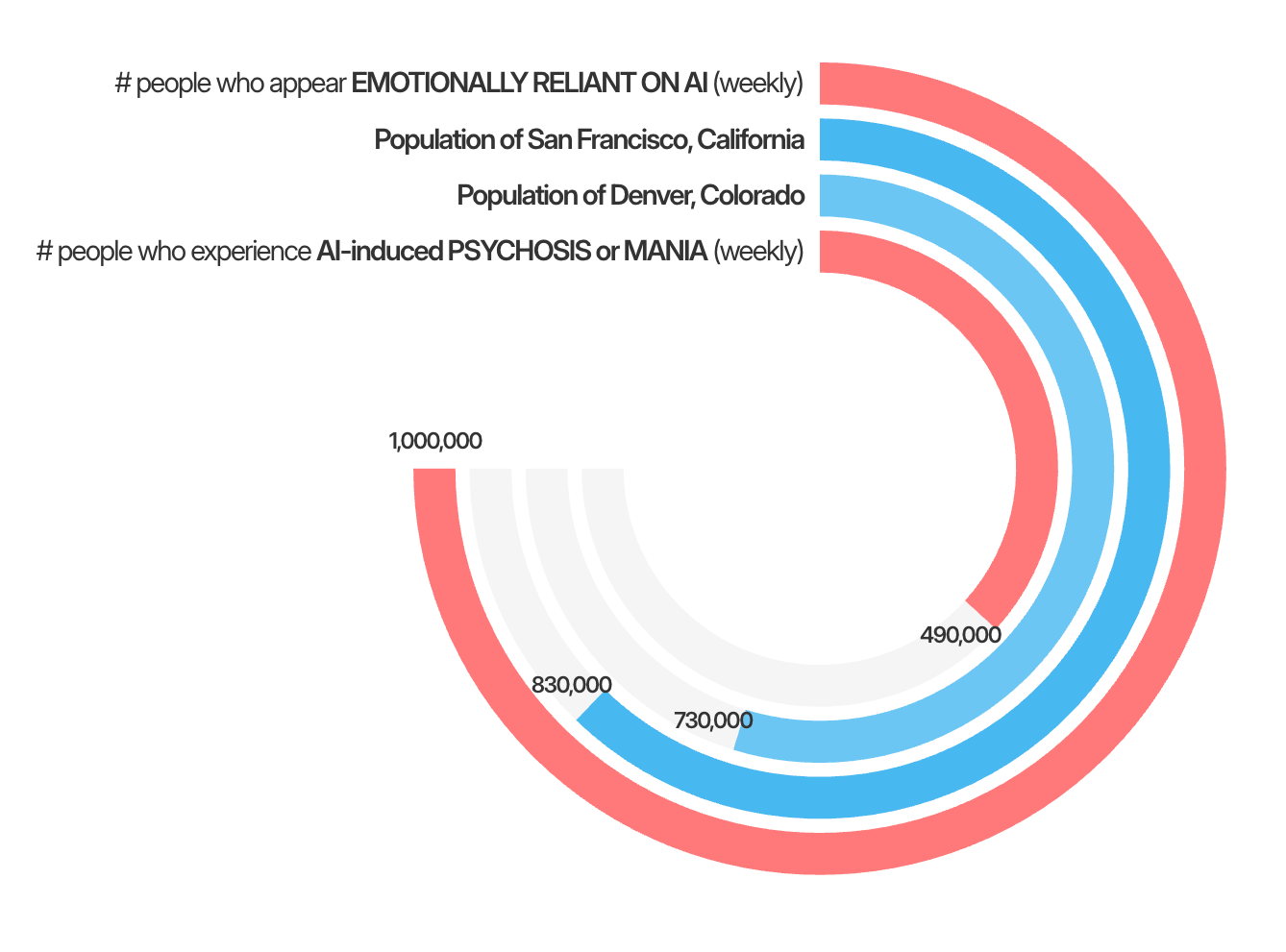

In its new safety report, OpenAI revealed that 0.07% of weekly ChatGPT users show signs of psychosis or mania, 0.15% express suicidal thoughts, and 0.15% show emotional reliance on the AI.

To be clear, emotional reliance on AI means “concerning patterns of use, such as when someone shows potential signs of exclusive attachment to the model at the expense of real-world relationships, their well-being, or obligations,” according to OpenAI’s own definition.

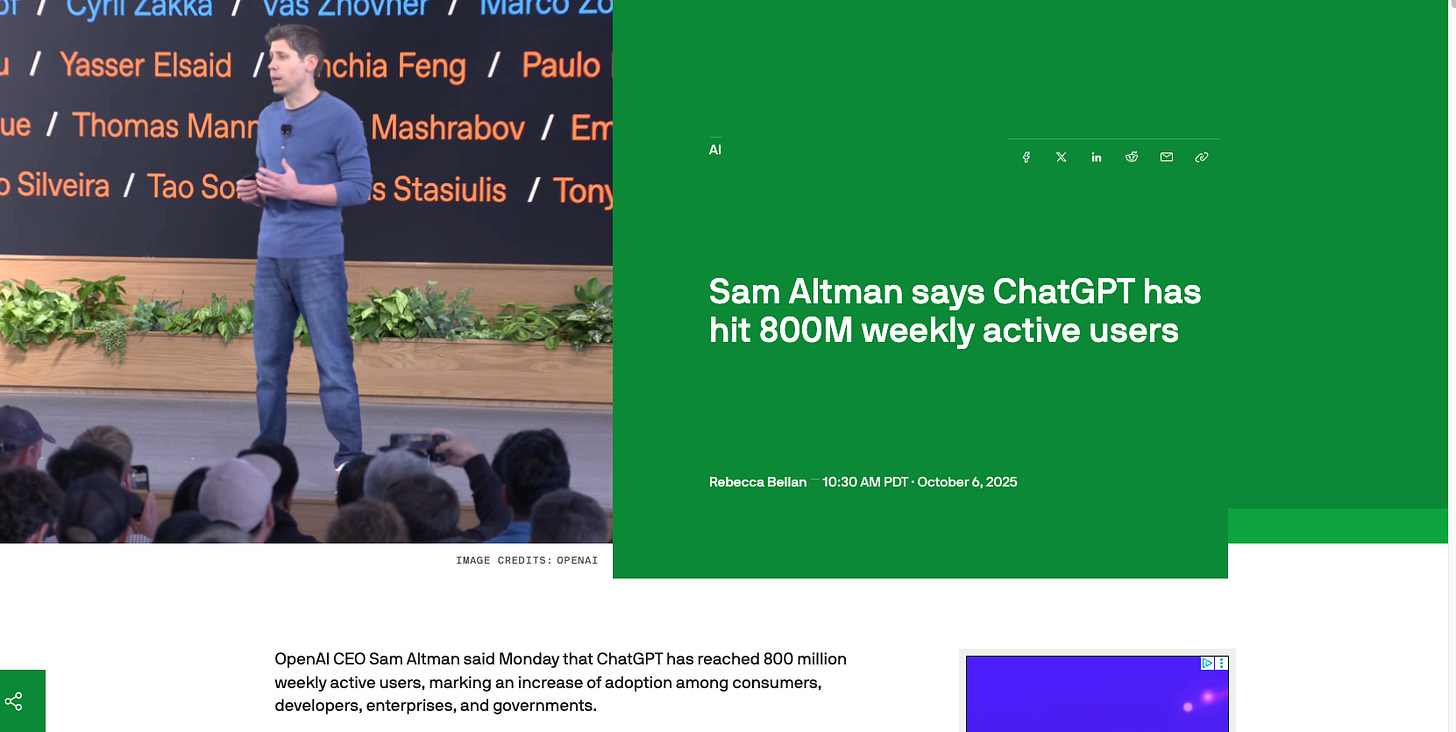

At first glance, those numbers (fractions of a percent) sound trivial. Until you remember that ChatGPT now serves around 800 million people every week.

Even if we discount for duplicate accounts and assume roughly 700 million unique users, OpenAI’s own math means:

About 1.05 million people, more than the entire population of San Francisco, show signs of unhealthy emotional reliance on ChatGPT every week.

Roughly 490,000 people, a number equal to over half the population of Denver, show signs of psychosis or mania in their conversations with ChatGPT, every single week.
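
For readers who want to check the arithmetic, here is a minimal sketch in Python. The 700 million figure is the assumption stated above, not an OpenAI number, and the city populations are approximate round figures chosen for illustration rather than official counts.

```python
# Assumed unique weekly users: OpenAI's ~800M figure, discounted for duplicates.
# The 700M value is this article's assumption, not a number OpenAI published.
UNIQUE_WEEKLY_USERS = 700_000_000

# Rates from OpenAI's report, expressed per 100,000 users so the math stays in integers.
RATES_PER_100K = {
    "psychosis_or_mania": 70,    # 0.07%
    "suicidal_thoughts": 150,    # 0.15%
    "emotional_reliance": 150,   # 0.15%
}

# Approximate city populations (assumed round figures, for comparison only).
SAN_FRANCISCO_POP = 810_000
DENVER_POP = 715_000


def weekly_count(users: int, rate_per_100k: int) -> int:
    """Number of users per week implied by a rate given per 100,000 users."""
    return users * rate_per_100k // 100_000


counts = {
    name: weekly_count(UNIQUE_WEEKLY_USERS, rate)
    for name, rate in RATES_PER_100K.items()
}

for name, count in counts.items():
    print(f"{name}: {count:,} people per week")

print("Emotional reliance exceeds San Francisco:",
      counts["emotional_reliance"] > SAN_FRANCISCO_POP)
print("Psychosis or mania exceeds half of Denver:",
      counts["psychosis_or_mania"] * 2 > DENVER_POP)
```

Changing `UNIQUE_WEEKLY_USERS` to 800 million, or to a more conservative figure, shows how sensitive each comparison is to the user-count assumption.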

Of course, when hundreds of millions of people are using a system, some (perhaps many) will already be predisposed to mental-health challenges. But that doesn’t make it acceptable to allow AI interactions to worsen them through emotional reliance, distorted feedback loops, or chatbot-driven psychosis. Scale changes the moral calculus. At this reach, the question isn’t whether some users are vulnerable, but what happens when technology amplifies that vulnerability by design.

And yet this is being written off as “rare.”

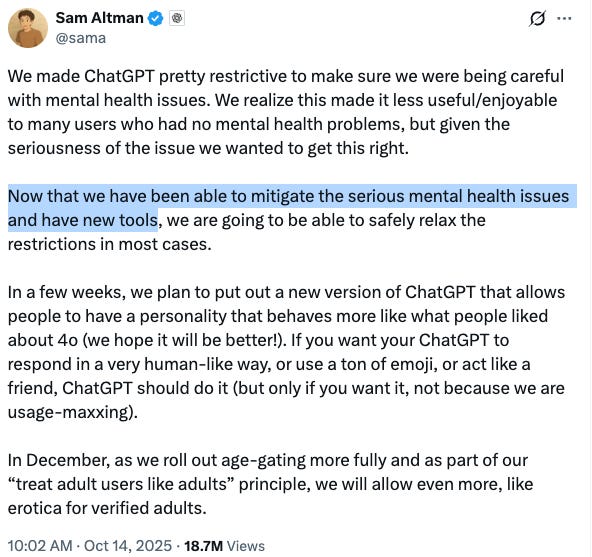

OpenAI’s CEO, Sam Altman, even announced: “Now that we have been able to mitigate the serious mental health issues and have new tools, we are going to be able to safely relax the restrictions in most cases.”

That’s… more than a little concerning.

If these were human therapists producing those outcomes, we’d have congressional hearings, not relaxed restrictions.

But algorithms don’t need licenses, and we’ve decided they don’t owe empathy the way humans do, even as we design them to act like empathetic humans.

And Those Numbers Likely Understate the Risk

These numbers likely don’t reflect what’s really happening among teens. OpenAI’s data represents the average user, including many adults using ChatGPT for work and productivity gains.

Teen behavior is different: adolescent brains are wired for novelty and social approval, and teens are in the middle of forming their identity. That makes them far more susceptible to building attachments, especially to a bot that flatters, agrees, and never rejects them.

A nationally representative survey by Common Sense Media found that nearly three-quarters of U.S. teens have tried an AI companion (including but not limited to ChatGPT as a companion), more than half use one regularly, and nearly a third say chatting with an AI feels as satisfying as—or more satisfying than—talking to a real person.

These aren’t isolated corners of the internet. They’re mainstream adolescents, forming their first deep emotional bonds not with peers, but with chatbots.

And now, the toy industry is pushing it even younger. In June, OpenAI and Mattel announced a partnership to bring “the magic of AI” to Mattel’s iconic brands. Startups like Curio are already selling conversational AIs as educational playmates for toddlers.

AI Companions Are Hacking Attachment

Social media hacked attention. Now AI companions go deeper, into the human need for connection.

Attachment isn’t a side issue of child development; it is development. It’s how kids learn empathy, reflection, and emotional regulation. It is how they form healthy, reciprocal relationships.

When chatbots become “friends,” they don’t just mimic care, they displace it. Kids end up practicing connection with systems designed to agree, praise, and never challenge them, and those patterns stick.

A Line We Cannot Cross

At some point, drawing the line stops being optional and becomes a moral necessity.

Recently, Dr. Nathan Thoma and I released an open letter calling on lawmakers and AI companies to take immediate action to protect children from AI companions.

So far, we’ve shared it mainly through respected mental-health networks and professional listservs, and it has already gathered over 400 signatories, including leading figures in psychotherapy research such as Peter Fonagy, Allan Abbass, Allen Frances, Falk Leichsenring, and Barbara Milrod.

Now we’re taking it wider, seeking support from parents, educators, concerned citizens, and professionals in every field who believe children’s emotional development should not be left to algorithms.

Regulate AI companion products for minors to protect the human developmental systems that actually build empathy.

The letter calls for concrete steps:

Real age verification. No more “type your birthday” theater.

Strict limits on chatbots accessible to minors: no romantic or sexual content, no emotional dependency loops, no long-term memory by default.

Mandatory human hand-offs in crisis.

Independent safety testing before launch, not after harm occurs.

Clear legal liability for psychological injury caused by these systems.

What the industry offers today isn’t safety by design. It’s addiction by design.

Read + Sign the Open Letter

If you share these concerns, please read and sign the open letter here:

Then share it in your professional networks, parent groups, classrooms, research circles, social media, and anywhere else people care about the next generation’s well-being.

We are still early enough to act, but only just. Every signature matters and every share spreads protection.

Together we can demand technology that strengthens human connection instead of replacing it.

P.S.

OpenAI deserves credit for transparency. But its own data shows that AI has already become part of the emotional infrastructure of daily life, without any of the guardrails or accountability that govern real-world care.

That is not a rare problem. It is a public-health crisis hiding in plain sight.

Even companies built entirely around AI companions are beginning to acknowledge the risk. Character.AI recently announced it will remove open-ended chats for users under 18 and begin introducing age-verification tools. This is a milestone worth noting, but not one born purely of conscience. The decision follows mounting lawsuits, public pressure, and growing scrutiny over the harm these systems can cause.

Meetali Jain, a lawyer who represents Megan Garcia, a parent suing Character.AI, said in a statement that she welcomed the new policy as a “good first step” toward ensuring that Character.AI is safer. Yet she added that the pivot reflected a “classic move in tech industry’s playbook: move fast, launch a product globally, break minds, and then make minimal product changes after harming scores of young people.”

It’s a welcome pivot, but we’ve yet to see how or whether it will be meaningfully enforced. Without real age verification and independent oversight, even this “first step” risks becoming more PR than protection.

Progress is not the same as accountability, and children’s safety should never depend on voluntary restraint.